When a visitor is browsing your website, what impacts their willingness to start a relationship with your organization? The decisions you have made around your site's design, messaging, content, and layout. How confident are you in these decisions? As you update your site, how can you be sure the changes improve your visitors' experience?

A/B Split Tests are a tool for increasing confidence in the decisions you make about your website. They allow you to scientifically test and validate hypotheses around your goals. Google Analytics' "Experiments" tool makes it simple to set up A/B Split Tests on any website using Google Analytics (GA). In this post, we'll walk through each of the following steps.

1. Define your Goal

The first step in setting up a Google Experiment is defining what metric will be used to determine success. The objective of the experiment can be:

- Reducing website bounces

- Increasing website pageviews

- Increasing the time visitors are spending on the site

- A Goal that has been defined in GA
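
To make these four objectives concrete, here is a minimal Python sketch of how each metric could be computed from raw session data. GA calculates all of this for you; the `Session` record, the `summarize` helper, and the sample numbers are hypothetical, and a bounce is simplified here to mean a session with a single pageview.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pageviews: int            # pages viewed during the visit
    duration_seconds: float   # time spent on the site
    converted: bool           # whether the visit completed the Goal


def summarize(sessions):
    """Compute the four candidate experiment objectives for a list of sessions."""
    total = len(sessions)
    if total == 0:
        raise ValueError("need at least one session")
    # Simplification: treat any single-pageview session as a bounce.
    bounces = sum(1 for s in sessions if s.pageviews == 1)
    return {
        "bounce_rate": bounces / total,
        "pageviews_per_session": sum(s.pageviews for s in sessions) / total,
        "avg_session_seconds": sum(s.duration_seconds for s in sessions) / total,
        "goal_conversion_rate": sum(1 for s in sessions if s.converted) / total,
    }


# Made-up sample data for illustration only.
sessions = [
    Session(pageviews=1, duration_seconds=0.0, converted=False),
    Session(pageviews=4, duration_seconds=180.0, converted=True),
    Session(pageviews=2, duration_seconds=60.0, converted=False),
    Session(pageviews=1, duration_seconds=0.0, converted=False),
]
summary = summarize(sessions)
print(summary)
```

Whichever metric you pick, the experiment compares that one number across your page variations.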

In our experience, the objective of the experiment is usually a specific Goal that's been created in GA. Goals are a big topic on their own. If this is your first time setting one up, check out this Guide on Setting up Goals in Google Analytics. A few examples of the types of Goals you could track are:

- Tracking supporter engagement and fundraising for nonprofit organizations

- Tracking donations and volunteer registrations for political organizations

- Tracking client leads for law firms

2. Form your A/B Test Hypotheses

With your objective of increasing engagement, or leads, or donations established, it's time to form your hypotheses: what design, messaging, content, or layout decisions do you think could achieve this goal more effectively?

With Google Analytics Experiments, you're not limited to just two hypotheses, A and B. You can set up as many as you'd like. The approach Google uses is called a multi-armed bandit experiment. The statistics behind it are complex, but the idea is not: as time goes on and some hypotheses start showing promise, Google sends more visitors to the leaders. This has two big advantages over a traditional A/B test that distributes traffic evenly until completion. First, by sending more traffic to winning variations while the experiment is running, you start to benefit from the leaders earlier, and fewer visitors are sent to poor performers before the experiment ends. Second, as a side effect, choosing the best of several good options happens more quickly.
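
If you're curious what "sending more visitors to the leaders" can look like, here is a small Python simulation. It is only a sketch: we don't know the exact details of Google's algorithm, so it uses Thompson sampling, one common multi-armed bandit strategy. The variation names, the "true" conversion rates, and the `choose_variation` and `simulate` helpers are all made up for illustration.

```python
import random


def choose_variation(stats, rng):
    """Pick the variation for the next visitor using Thompson sampling."""
    best_name, best_draw = None, -1.0
    for name, (conversions, visitors) in stats.items():
        # Draw a plausible conversion rate from a Beta posterior
        # (uniform prior, updated with the results seen so far).
        draw = rng.betavariate(1 + conversions, 1 + visitors - conversions)
        if draw > best_draw:
            best_name, best_draw = name, draw
    return best_name


def simulate(true_rates, visitors, seed=0):
    """Route simulated visitors one at a time and record the results."""
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}  # [conversions, visitors]
    for _ in range(visitors):
        name = choose_variation(stats, rng)
        stats[name][1] += 1
        if rng.random() < true_rates[name]:
            stats[name][0] += 1
    return stats


# Hypothetical conversion rates; in a real experiment these are unknown.
results = simulate(
    {"original": 0.05, "variation_b": 0.08, "variation_c": 0.20},
    visitors=5000,
)
for name, (conversions, seen) in results.items():
    print(name, "visitors:", seen, "conversions:", conversions)
```

In a run like this, the strongest variation should end up receiving most of the traffic, which is the behavior described above: weak performers stop costing you visitors early on.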

Each hypothesis must be set up as its own page, with a unique URL, on your website. How this is done will depend on the content management system (CMS) your website uses. Make sure, however, that the only thing that differs between the pages in your experiment is what you're testing. If you change several aspects of a page at once, you won't know which change led to the increased conversion rate.

For instance, let's say you want to test whether reducing the number of fields on your contact form leads to an increase in form submissions. You shouldn't also use a different banner image on one of the two form pages, as that could confound the results.

In the above example, the two pages would be exactly the same, except that on one contact form you ask for:

- First Name

- Last Name

- How can we help you?

On the other contact form, you ask for only:

- How can we help you?

Once you finish running your first experiment on whether the number of form fields impacts submission rates, you can then set up a new experiment to see if the banner image impacts submission rates.

3. Set up your Google Analytics A/B Split Test Experiment

With a goal and hypotheses in place, it's time to set up your experiment in GA. This is the easy part!

3a. Create Experiment

You can reach your experiments in GA from the reporting sidebar. Select Behavior, then Experiments. This is how you'll get to your experiments after you've set them up. Select Create Experiment.

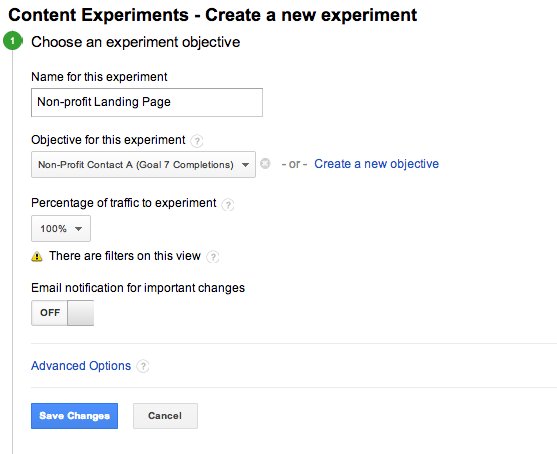

Experiments only require a Name and the Goal you're evaluating success with. There are other options, too, like how much of your traffic should be experimented with, how long the experiment should run, the confidence level with which a winner is determined, and so on. For your first experiments, you can safely use the defaults.

3b. Drop in the Original URL and Hypothesis Variation URLs

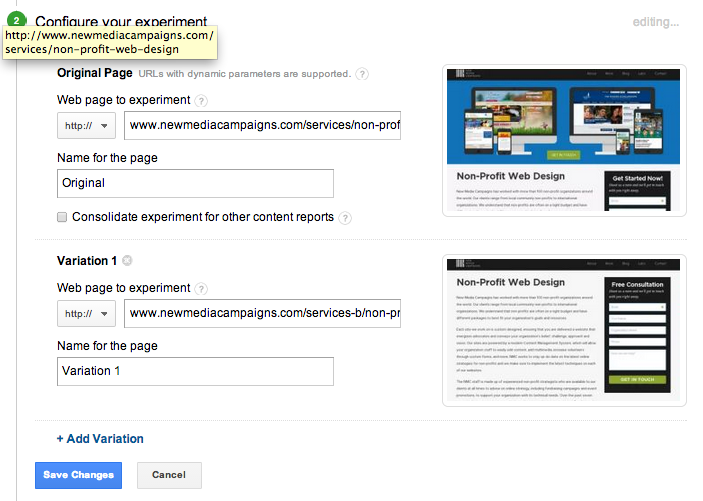

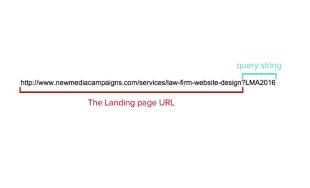

The next step is to add all of your hypotheses to the experiment. This is just a matter of copying and pasting in the URLs you set up. If you are testing changes to a page that already exists, use it as the Original. For entirely new content, pick the URL you will eventually want to use for the winner. The Original Page is where the code snippet will be installed in step 3c.

3c. Insert the experiment Testing Code

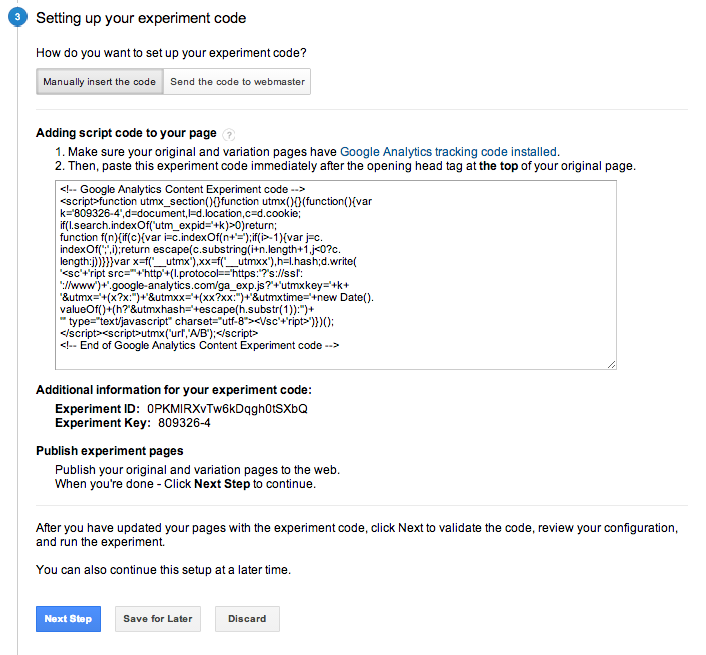

Once your hypothesis URLs are set up, the next step is to select "Manually insert the code" and install the experiment code snippet immediately after the opening <head> tag of your Original Page.
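
Most of the time you'll paste the snippet into your template by hand. If your pages are generated by a script, the placement can be sketched in Python as below. The `insert_after_head` helper and the sample page are hypothetical, and the snippet shown is only a placeholder comment; the real code must be copied from GA.

```python
import re


def insert_after_head(html, snippet):
    """Return html with snippet placed right after the opening <head> tag."""
    # \b keeps this from matching other tags such as <header>.
    match = re.search(r"<head\b[^>]*>", html, flags=re.IGNORECASE)
    if match is None:
        raise ValueError("no <head> tag found")
    end = match.end()
    return html[:end] + "\n" + snippet + html[end:]


# Placeholder only: paste the snippet GA gives you here.
EXPERIMENT_SNIPPET = "<!-- experiment code copied from GA goes here -->"

page = "<html><head><title>Contact us</title></head><body></body></html>"
updated_page = insert_after_head(page, EXPERIMENT_SNIPPET)
print(updated_page)
```

Only the Original Page needs this treatment; the variation pages are reached through the experiment itself.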

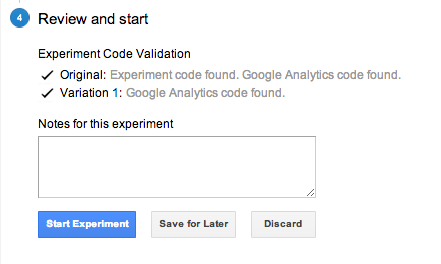

After you have saved the template, click Next Step and GA will verify that your experiment code is properly in place.

All that is left now is to Start Experiment and GA will take care of routing visitors to your hypotheses and recording their effectiveness.

Deciding the Winner

The winning hypothesis will be decided algorithmically over some period of time, usually on the order of 2 to 4 weeks. This period will vary in length depending on the volume of traffic to your site, the relative performance of the hypotheses, the rate at which the leading hypothesis converts, and so on.

For experiments that end with a clear winner, if it is not the original, there are just a few more steps to take. First, replace the original's content or template with the winning variation's. Second, remove the experiment code from the template.

For some experiments, you may be able to decide to move forward with a hypothesis before Google's algorithm can declare a statistical winner. An example of this is a desirable change to your website that is performing the same as the original page it is meant to replace and has a low probability of outperforming the original. In these cases, you can manually select Stop Experiment to end it, replace the original's content with the hypothesis's, and remove the experiment code.
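
To give a feel for what a "probability of outperforming the original" means, here is a rough Python sketch that estimates it by simulation. This is not necessarily how GA computes its figure; it assumes a simple Beta model of each page's conversion rate, and the `probability_beats_original` helper and the visitor counts are made up.

```python
import random


def probability_beats_original(original, variation, draws=20000, seed=0):
    """Estimate the chance that the variation's true conversion rate is higher.

    Each argument is a (conversions, visitors) pair.
    """
    rng = random.Random(seed)
    o_conv, o_vis = original
    v_conv, v_vis = variation
    wins = 0
    for _ in range(draws):
        # Sample a plausible conversion rate for each page from a Beta
        # posterior (uniform prior) and compare the two samples.
        o_rate = rng.betavariate(1 + o_conv, 1 + o_vis - o_conv)
        v_rate = rng.betavariate(1 + v_conv, 1 + v_vis - v_conv)
        if v_rate > o_rate:
            wins += 1
    return wins / draws


# Hypothetical results: 40 of 1000 visitors converted on the original,
# 70 of 1000 on the variation.
clear_lead = probability_beats_original(original=(40, 1000), variation=(70, 1000))
# Two pages performing the same: the estimate should hover around one half.
toss_up = probability_beats_original(original=(50, 1000), variation=(50, 1000))
print(clear_lead, toss_up)
```

A value near one half is the "performing the same as the original" situation described above, where stopping the experiment manually is a judgment call rather than a statistical result.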

Google Analytics Experiments is a free A/B Split testing tool you can easily set up to help you make confident decisions about your website's design, messaging, content, and layout. It is a powerful tool you can use to make your website better at achieving your organization's goals.

Comments

JamesJharper

Split testing is a time-consuming effort which might definitely yield great results. However, one requires to wait around 3 to 4 weeks before it will actually produce any effective results. Furthermore, there are chances that these tests can entirely fail as well. So, asking experts such as FWA Marketing will be highly beneficial when it comes to result-driven marketing strategies.

John

By what means does Analytics switch between the pages? Does it do this by creating redirects, or some other method? It would be good to have this key issue explained here.

Cassineira BR

I didn't like to create two addresses to make the AB. Optimizely, for example, uses javascript to sort and show the difference. In my opinion that is better, because in my company all pages are statically generated by an admin (and CacheOS, Varnish, etc). It isn't very simple to bring up two different pages. And it is important to add the link rel canonical to avoid duplicated pages in SEO.

I prefer use events instead of this AB.

If you are a javascript developer, you can use this lib.

https://github.com/tacnoman/googleAnalyticsAB

STW Services LLP

This is very helpful article for ab split testing. Easily explain & guide steps. Thanks

Nathan

This is going to be very useful to use alongside a PHP split test script I'm using. Just wondering how to deal with both pages using the same url unless I can append with a query. Looks like a little work needed in the PHP script. Cheers

ben

Is it possible to run this a/b test for a specific campaign of traffic source? Thanks

Graham

Just realized how the word "should" when used improperly causes the narrative voice to sound like a know-it-all.