WebKit Team Admits Error, Downplays Importance, Re: 'Unacceptable Browser HTTP Accept Headers'

On Friday we published a post describing the HTTP Accept header and WebKit's unfortunate use of it. Using the Accept header the browser can indicate to the server its Content-Type preferences.

Maciej Stochowiak of Apple's WebKit team responded to our post, admitting WebKit's error:

Most WebKit-based browsers (and Safari in particular) would probably do a better job rendering HTML than XHTML or generic XML, if only because the code paths are much better tested. So the Accept header is somewhat in error...

The latest versions of WebKit, and thus Safari and Chrome, prefer XML over HTML in the Accept header. If a server is following the HTTP spec and serving a resource that can be represented as XML or HTML, it will respond with HTML to Firefox and XML to Safari.

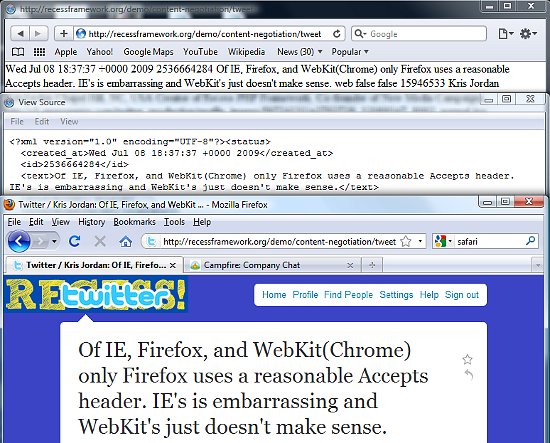

We set up a quick demo of a tweet that can be represented as HTML, XML, JSON, and for fun, a JPG. Try opening http://recessframework.org/demo/content-negotiation/tweet in Firefox and then Safari/Chrome. You'll get just what each browser's Accept header asked for (pictured below). (Aside: For fun, try it in IE and you'll get a JPG. Then hit refresh and you'll get the HTML. Here's why.) (Demo built using the latest GitHub version of Recess, a RESTful PHP Framework. Source code here.)

Why does WebKit's Accept preference of XML over HTML matter?

Maciej of Apple's WebKit team goes on in his response to downplay the chosen Accept header's importance:

On the other hand, this isn't a hugely important bug, [...] since content negotiation is not really used much in the wild.

Maciej, your transparency and admission of the error is appreciated, but your assesment of WebKit's Accept header importance is wrong. Content-type negotiation has not seen used much in the wild for two reasons:

- Server-side software working against the grain of the HTTP spec made it historically difficult.

- Web browsers improperly using the Accept header made it historically worthless.

On point 1, HTTP 1.1 is now 10 years old and in the past couple of years Fielding's REST movement has gained momentum. Server-side implementation is no longer a hard problem: most servers and frameworks can handle content-negotiation just fine.

On point 2, of modern browsers WebKit is the last one blocking the primary use case for content-type negotiation that today's web apps have: representing resources as either HTML or XML. Even though Internet Explorer's Accept header is full of garbage, its sins result in wasted resources and a performance penalty, not incorrectness. WebKit's Accept header results in web developers choosing between HTTP incorrectness or a bad user experience. Follow the HTTP spec and your users will get XML dumps as demo'd, or do not follow the HTTP spec and roll your own one-off content-negotiation protocol. The choice is usually simple: user experience is more valuable than developer experience and adherence to the web's most important RFC.

Why does WebKit use this Accept header?

The final pieces to Maciej's response identify the origin of WebKit's Accept header as Firefox's old Accept header:

...we design our Accept header mainly to give the best compatibility on Web sites [...] Our current header was copied from an old version of Firefox.

Firefox 3.0 and 3.5, tested in our previous post, have reasonable Accept headers. Maciej is right, though, Firefox 2.0 looks to have had a particularly bad Accept header:

text/xml,application/xml,application/xhtml+xml, text/html;q=0.9,text/plain;q=0.8,image/png,*/*;q=0.5

Just like WebKit's, Firefox 2.0 prefers XML, XHTML, and PNG, over HTML. WebKit must have copied Firefox's Accept header on multiple occasions, judging from this year old commit which removes the antiquated 'text/xml' type from WebKit's list.

Firefox 2 sent "text/xml" but in an acceptable order for the web server. And Firefox 3 doesn't send "text/xml" at all in the Accept header since it is being deprecated in favor of "application/xml". We decided that the best solution is to match Firefox 3 and stop sending "text/xml" in the Accept header.

In all this copying of Firefox's Accept header the WebKit team missed the forest for the trees. WebKit's team knows quite well it "would probably do a better job rendering HTML than XHTML or generic XML, if only because the code paths are much better tested" but failed to express this in its Accept header. Fortunately, it's an easy fix.

The Fix: Change this String at Line 200 of FrameLoader.cpp

On line 200 of WebCore/loader/FrameLoader.cpp resides the string that causes this pain:

static const char defaultAcceptHeader[] = "application/xml,application/xhtml+xml,text/html;q=0.9,text/plain;q=0.8,image/png,*/*;q=0.5";

WebKit could make amends with web developers wanting to use HTTP content-negotiation to provide XML and HTML representations of their resources without crippling the end-user experience by changing their default Accept header in one of two ways:

- Keep up the status quo and copy Firefox 3.5's reasonable Accept header:

text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8 - Live a little, give preference to your browser's kick ass HTML abilities, and save some bandwidth:

text/html,*/*

The error has been entered to WebKit's Bugzilla tracker here. I will post updates to this blog (RSS feed) regarding the status of WebKit's fix as they come. We can only hope that, in time, we'll be able to use the Accept header as it is specified and make the internet a slightly happier, more RESTfullier place.

Comments

Jamie Thompson

Apologies for resurrecting an old post, but one I felt I should comment on as I was just surprised by it.I'm currently updating my site that I built 5 years ago. I built it to use content negotiation extensively.

The system I built has every document as a XML resource, with a stylesheet specified that transforms it to XHTML.

If the browser supports XSLT, as Firefox 2 (current when I built the site) used to prefer, the XML is served and the client transforms it.

If the client prefers text/html, then the transformation is performed server-side and the result served.

Explicit formats can be obtained by requesting the resource with a file extension:

Namely /news is the resource, /news.xml will serve the xml, and /news.html will serve the transformed content. The actual resources involved are news.xml.pxml and news.html.phtml (which is just a place holder containing a fixed redirect to the requested resource in lieu of a server module that can do this automatically). Only hack is that I have to check for IE which claims it supports everything (which we both know it doesn't) and force HTML.

Works brilliantly, but I just discovered Firefox has been requesting the transformed content for the last few years due to the accept header change you mention :(

Sebastien Lambla

This problem is only relevant when you dont give priorities on the server side to your media types. In apache you can use the qs mediatype parameter, while openrasta uses the typical q. By default, openrasta also gives lower priority to app/xml when sorting them, which solves the problem nicely, including with IE.

Mike

I don't really like either of the solutions which use the URI, but the query parameter solution seems to make more sense. There are, however, headaches associated with using query param URIs and the effect that has on caching - most will simply not cache them.

HTTP content negotiation (i.e. the spec) isn't broken or insufficient, but I agree that implementations are poor. The topic of these posts is a good example of this, where incompetence from browsers is rendering the Accept header virtually useless.

Kris also made a good point on HTML hyperlinks, although the type attribute (I believe) was included as merely a 'suggestion' to the HTML client about the content-type that should be expected from the URI, rather than as an indicator of preference for the outgoing request.

I suggested adding an accept="" attribute to HTML5 hyperlink tags to solve this problem, however the working group didn't agree that HTTP conneg should be "allowed" because it's "broken", and "that's what the ends of URIs are for".

This is a frustratingly poor, and silly, argument since the reason URIs are used is not because they are a superior solution or less complex to implement - but actually because HTML markup and browsers provide no mechanisms to leverage HTTP conneg; which is pretty poor considering they are HTTP clients.

These kinds of fixes and additions would not brake or interfere with current practices of using the URI - the 2 could exist together quite happily. What it would do, however, is liberate systems developers to choose what is the best solution for a given context, rather than being railroaded into what the HTML5 working group and browser vendors *hypothesize* is 'best practice' and 'best for users'.

Matthew Markus

Kris,

I think that a type's file extension is usually declared in its standards document (e.g. RFC). Here is a relatively good cheatsheet:

http://docs.amazonwebservices.com/AWSImportExport/2009-05-20/DG/index.html?FileExtensiontoMimeTypes.html

Believe it or not, I too am concerned about URI opacity. In fact, the one reason why I shied away from the "?mimeType=" solutions was the lack of a canonical form for query strings which makes a byte-to-byte comparison of two URIs impossible. Hence, my support for the defacto ".{ext}" solution.

Kris Jordan

Great discussion Matthew and Mike. I am on Mikes side in his assessment of URIs -> resources. A URI points to a single resource and resources can have multiple representations. By the HTTP spec the mechanism for obtaining one representation or another is through the Accept header using a list of media/MIME-types. Mike captures the upsides in staying true to the spec in this regard.

Matthews pragmatism, pointing out "out of band, confusing, and poorly implemented HTTP content negotiation", has its merits, too. Browsers do not follow the "type" attribute on "a" tags, for example, so there is no way to link directly to a resource and type from within a web page where Accept is the only selection mechanism. For this reason, in Recess I did choose to implement the .{ext} extension that overrides the Accept header. (So, for example, in any browser tack on .xml, .html, .json, .jpg to the demo URL and you will get that type back: http://recessframework.org/demo/content-negotiation/tweet ) This is the way many others have done it, but honestly your suggestion of using the query string feels a lot less dirty.

Mike, what are your thoughts on the fall-back to "Accept: text/html", being a query parameter like "?header[accept]=text/html" vs, http://foo.html Do intermediaries cache GETs to resources with the same query strings?

One other point to call out is that there is no standard, that I know of, for mapping between these 3/4 letter extensions and MIME-types. IANA being the authoritative body only defines MIME-types: http://www.iana.org/assignments/media-types/

Matthew Markus

Mike, thanks for the post. After reading it, I realized just how far I have moved from Fielding's description of REST. I wouldn't say that I'm STREST-ed either, though (pun intended). I would like to briefly comment on one point you made:

"The reason I claim this is because URIs are intended to be opaque; that is to say that there is no standard anywhere that implies a relationship between the resources /tweet/123.xml and /tweet/123.json - and therefore a PUT to /tweet/123.xml does not infer a PUT to /tweet/123.json."

I would like to declare that perhaps there should be a standard to imply such a relationship. The query string of a URI is already considered to be semi-opaque [1]. In fact, some have suggested that this is the ideal place to specify a format [2]:

http://example/apple?mimeType={mime-type}

Personally, I prefer the defacto standard of ".{ext}" with a mapping of extensions to mime-types. Enforcing such a simple standard seems a much better path to go down than relying on the out-of-band, confusing, and poorly implemented HTTP content negotiation specification, IMHO. This is especially true when changes in human context can cause one resource with two representations (e.g., an apple) to suddenly appear as two resources, each with its own representation (e.g., a copyrighted GIF of an apple vs. a public domain XML description of an apple).

[1] http://rest.blueoxen.net/cgi-bin/wiki.pl?OpacityMythsDebunked

[2] http://www.xml.com/lpt/a/1459

Mike

Matthew, the case you detail is an example of two separate <b><i>resources</i></b> - therefore they quite rightly require two separate URIs.

It would definitely be naive to treat them as the same resource.

However, there are many other cases where one resource may actually have multiple representations. Kris provided an example of a tweet, which is an excellent example because the xml, json, and html are all representations of the same 'tweet' resource - a PUT of any of these <b>representations</b> will 'update' the others. This is the nature of <b><i>Uniform</i></b> Resource Identifiers, and resource representations. HTTP content negotiation (in this case content-type negotiation via the Accept header) is designed specifically for this purpose.

There are significant benefits to this approach - the primary being that messages to tweet resources are far more self descriptive. This allows for uniform and decoupled <b><i>layering</i></b> via intermediaries.

The reason I claim this is because URIs are intended to be opaque; that is to say that there is no standard anywhere that implies a relationship between the resources /tweet/123.xml and /tweet/123.json - and therefore a PUT to /tweet/123.xml does not infer a PUT to /tweet/123.json.

This is extremely important when it comes to introducing intermediaries such as caches into your system. How is an intermediary cache supposed to know whether PUT /tweet/123.xml also invalidates the cache for /tweet/123.json? It can't; without you teaching it about, and tightly coupling it to, your system. This is a <b>very bad thing</b> to introduce to a large, distributed system.

Layering/Caching is a key part of REST; self-descriptive messages and uniform resource identifiers are a key enabler of this.

Cheers,

Mike

http://twitter.com/mike__kelly

Matthew Markus

"WebKit's Accept header results in web developers choosing between HTTP incorrectness or a bad user experience. Follow the HTTP spec and your users will get XML dumps as demo'd, or do not follow the HTTP spec and roll your own one-off content-negotiation protocol."

It is hard to talk about this issue in 140 characters, so here are my thoughts...

The naive use of Accept is non-RESTful because it renders external provenance data useless or wrong. For example, suppose there is a resource, http://example.com/apple, that has a XML representation and a HTML representation. Furthermore, assume the XML representation was authored by Jon and the HTML representation was authored by Jake. Now, suppose I tweet something like the following:

"check out Jon's work @ http://example/apple"

If you dereference the above URI in Firefox you'll actually be looking at Jake's work. This means I need to send a second piece of state information (almost like a cookie) along with my tweet, like so:

"check out Jon's work @ http://example/apple, but first set your browser to application/xml"

Clearly, this is a step backward compared with something like:

"check out Jon's work @ http://example/apple.xml"

In fact, the trouble with WebKit's Accept header is not that it is wrong, just that it is unexpected. That is, WebKit's behavior is dependent upon a piece of shared state embedded across all WebKit clients and, furthermore, that shared state is different than the shared state embedded across all Firefox clients. None of this sounds RESTful to me.

Leave a comment